float Q_rsqrt( float number ) {

long i;

float x2, y;

const float threehalfs = 1.5F;

x2 = number * 0.5F;

y = number;

i = * ( long * ) &y; // part 1

i = 0x5f3759df - ( i >> 1 ); // part 2

y = * ( float * ) &i;

y = y * ( threehalfs - ( x2 * y * y ) ); // part 3

}

I found it on YouTube and, as my friend Nolan reminded me the other night, this algorithm is not new. The fast inverse square root shook the nerd world with its implementation in Quake III (1999).

Notice that it doesn’t use any division operator which is naturally slow on a digital computer; this algorithm speeds up computation of the inverse square root by 3x compared to conventional division and square root operations. The reason for writing it in C is evident in the first part, although these tricks also have analogs in often in other languages such as C++, Python, etc. and is thus implemented in those languages as well.

Part 1

It uses tricks inherent in the language to cast the address of float (aka a float pointer) to a long pointer. The importance of using a long is evinced in the second part because it allows for quick division by 2. Since standard binary numbers, unlike floats, are not disjointed numbers in base 2, a single right bit shift performs floor halving.

Part 2

This part is the real meat of the trickery in this clever solution. The second half I already explained in the first part but the first half involves rearranging the floating-point formula for a square root division to solve for scalar values. Recall that floating point numbers are comprised of a mantissa and an exponent. The actual digit number can then be composed of a formula of these two parts: (1 + M/2^23)*2^(E - 127) . The exponent is shifted down by 127 in IEEE 754 in order to represent negative values so 2^4 is actually 2^(131-127) where 131 is the number passed in the exponent. Since the mantissa needs to be between 1 and 2 in binary a 1 is fixed as the first digit before the point and the mantissa is divided by 2^23 since the mantissa is represented by 23 bits. Taking the logarithm of the above formula results in: log(1+M/2^23) + log(2^(E - 127)) . The trick to the problem is that log(1+x) ~= x + mu for small numbers (e.g. fractions less than 1). So this simplifies to: M/2^23 + mu + E - 127. Rearranging results in: 1/2^23 * (M + 2^23*E) + mu - 127 which is much more useful because the bit representation of a floating point number (M + 2^23*E) is included. Recall that the binary number for a floating number is 8 bits for E followed by 23 bits for M.

Now that the background is setup, we can continue calculating 1/sqrt(x), or actually 1/sqrt(y) in this case. With the rearrangements above, it becomes much easier to calculate the logarithm of a floating point number. So log(1/sqrt(y)) = log(y^(-1/2)) = -(1/2)*log(y) . So if we want to solve for some G s.t. G = 1/sqrt(y) where log(G) = -(1/2)log(y), we can substitute in the above rearranged logarithm formula for a floating point number and solve for the bit representation for G which ends up as: M_G + 2^23*E_G = (3/2)*2^23*(127 - mu) - (1/2)*(M_y + 2^23*E_y). Hence, the first term is that bizarre hexadecimal number and the dexter term is the subtracted single rightshift to halve the number.

The reason for casting the floating point y to a long should be fairly obvious; we need the hard-coded bit representation of y to be treated as such, not as a floating point number which has different arithmetic operators.

Part 3

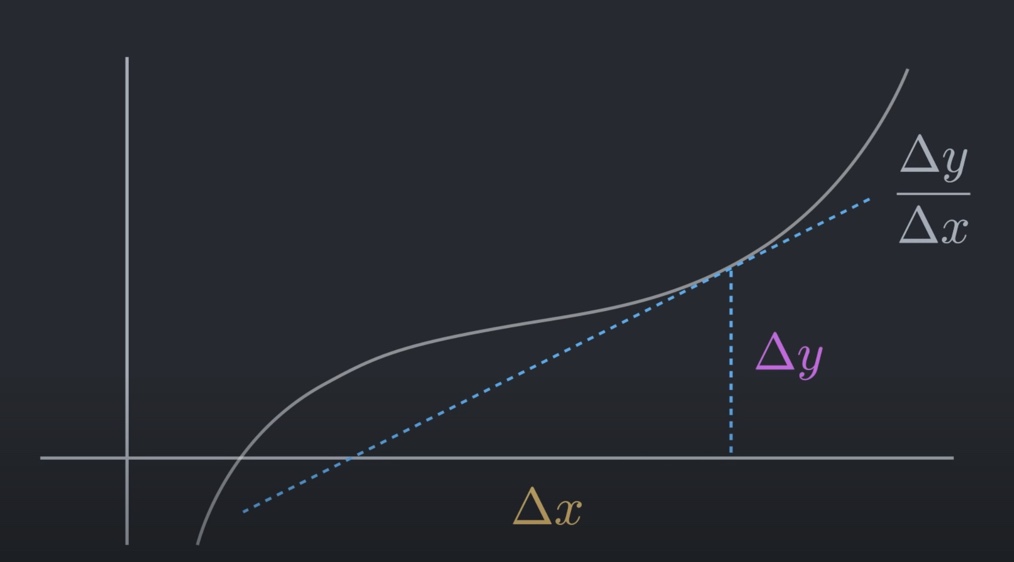

The third step is a little esoteric but not as technical as the second step. As with everything on computers, the numbers computed prior to this step are just an approximation. Due to all of the assumptions previously made (e.g. that the halving above isn’t floored for an odd number, that our chosen mu is accurate, etc.), we need to correct by some amount. Cue the Newton Iteration, which computes the error of x from a root for the function y. For this problem, the function is f(y) = 1/y^2 - x which rearranges to y = 1/sqrt(x) when y is a root (e.g. f(y) = 0). We need to compute x_new = x - f(x)/f’(x). Breaking down the derivative of a function: the derivative is described by the tangent line and where it intercepts the x-axis, composing the triangle below:

Since the ratio of the shifts times the shift in x equals the shift in y, the shift in x can be solved for as the shift in y divided by the ratio which ends up as: f(x)/f’(x). The actual implementation details of this aren’t made clear by the video, nor is the function derivative computed and I’m quite uncertain about which variable we take the derivative from, x or y. Nonetheless, it results in a static expression without any division operators required.